SK Hynix Samples 9 GT/s HBM3E: Up to 1.15 TB/s per Stack

SK Hynix said Monday that it had completed development of its first HBM3E memory stacks and is now sampling them to customers. The new stacks feature a data transfer rate of 9 GT/s, a whopping 40% higher than that of the company's HBM3 stacks.
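The 40% figure can be checked with simple arithmetic. The sketch below assumes that SK Hynix's previous-generation HBM3 stacks run at 6.4 GT/s. The article does not state that rate, so treat it as an assumption. The helper name `uplift_percent` is hypothetical and exists only for illustration.

```python
# Assumption: SK Hynix's prior HBM3 stacks run at 6.4 GT/s (not stated in the article).
HBM3_RATE_GTS = 6.4
# From the article: the new HBM3E stacks run at 9 GT/s.
HBM3E_RATE_GTS = 9.0


def uplift_percent(new_rate: float, old_rate: float) -> float:
    """Return the percentage increase of new_rate over old_rate (hypothetical helper)."""
    return (new_rate / old_rate - 1.0) * 100.0


print(f"HBM3E vs. HBM3: +{uplift_percent(HBM3E_RATE_GTS, HBM3_RATE_GTS):.1f}%")
```

Under that assumption the uplift is about 40.6%, consistent with the "40%" quoted above.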

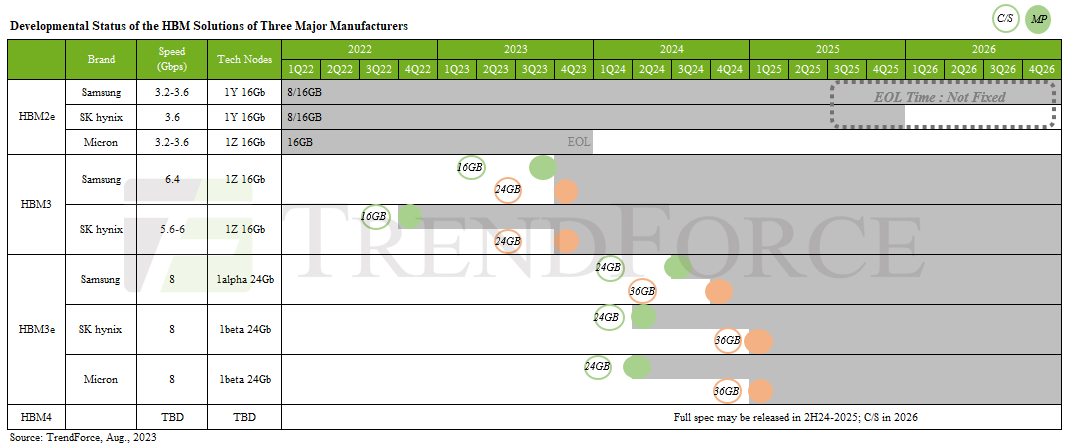

SK Hynix intends to mass-produce its new HBM3E memory stacks in the first half of next year. However, the company did not disclose the capacity of the modules (or whether they use an 8-Hi or 12-Hi architecture), nor exactly when it plans to make them available. Market intelligence firm TrendForce recently said that SK Hynix is on track to make 24 GB HBM3E products in Q1 2024 and to follow up with 36 GB HBM3E offerings in Q1 2025.

If TrendForce's information is correct, SK Hynix's new HBM3E modules will arrive just as the market needs them. For example, Nvidia is set to start shipping its Grace Hopper GH200 platform with 141 GB of HBM3E memory for artificial intelligence and high-performance computing applications in Q2 2024. This does not necessarily mean that Nvidia's product will use SK Hynix's HBM3E, but mass production of HBM3E in the first half of 2024 strengthens SK Hynix's standing as the leading supplier of HBM memory by volume.

However, SK Hynix won't be able to take the performance crown. Its new modules offer a 9 GT/s data transfer rate, a touch slower than the 9.2 GT/s of Micron's HBM3 Gen2. While Micron's stacks promise a bandwidth of up to 1.2 TB/s, SK Hynix's top out at 1.15 TB/s.
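The per-stack bandwidth figures follow from the data rate and the width of the memory interface. The sketch below assumes a 1024-bit interface per stack, as used by HBM3. The article does not state the bus width, so that is an assumption. The function name `stack_bandwidth_gbs` is hypothetical.

```python
# Assumption: each HBM stack exposes a 1024-bit interface (as in HBM3; not stated in the article).
HBM_BUS_WIDTH_BITS = 1024


def stack_bandwidth_gbs(rate_gts: float, bus_width_bits: int = HBM_BUS_WIDTH_BITS) -> float:
    """Peak per-stack bandwidth in GB/s: transfers/s (in G) x bits per transfer / 8 bits per byte."""
    return rate_gts * bus_width_bits / 8


# Data rates from the article.
for vendor, rate in [("SK Hynix HBM3E", 9.0), ("Micron HBM3 Gen2", 9.2)]:
    bw = stack_bandwidth_gbs(rate)
    print(f"{vendor}: {rate} GT/s -> {bw:.1f} GB/s ({bw / 1000:.2f} TB/s)")
```

At 9 GT/s this gives 1,152 GB/s, matching the 1.15 TB/s quoted for SK Hynix. At 9.2 GT/s it gives roughly 1,178 GB/s, which rounds to the 1.2 TB/s quoted for Micron.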

Although SK Hynix has not revealed the capacity of its HBM3E stacks, it says they employ its Advanced Mass Reflow Molded Underfill (MR-MUF) technology. This approach shrinks the gap between the memory dies within an HBM stack, which improves heat dissipation by 10% and allows a 12-Hi HBM configuration to fit into the same z-height as an 8-Hi HBM module.

One intriguing aspect of Micron and SK Hynix mass-producing HBM3E memory in the first half of 2024 is that JEDEC has still not formally published the new standard. Perhaps demand for higher-bandwidth memory from AI and HPC applications is so high that the companies are rushing into mass production to meet it.