AI Boom Sees Memory Makers Ramp Up HBM Production: Report

Memory makers are ramping up production capacity for high-bandwidth memory (HBM) in response to rapidly surging orders from cloud service providers (CSPs) and from developers of artificial intelligence (AI) and high-performance computing (HPC) processors, such as Nvidia, according to TrendForce. The market research firm estimates that annual HBM bit shipments will grow 105% in 2024.

TrendForce claims that leading DRAM producers such as Micron, Samsung, and SK Hynix supplied enough HBM in 2022 to keep pricing predictable. In 2023, however, a rapid increase in demand for AI servers led clients to place orders early, pushing suppliers to their production limits. TrendForce now projects that aggressive capacity expansion by suppliers will raise the HBM sufficiency ratio from -2.4% in 2022 to 0.6% in 2024.

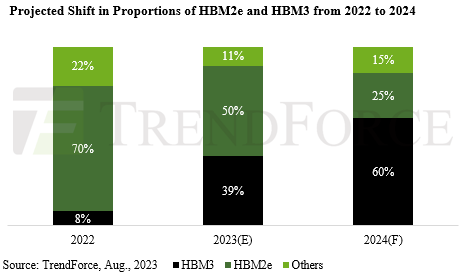

In addition to surging demand for HBM in general, TrendForce analysts note an ongoing shift in demand from HBM2E (used on Nvidia's H100 PCIe cards, AMD's Instinct MI250X, and Intel's Sapphire Rapids HBM and Ponte Vecchio products) to HBM3 (used on Nvidia's H100 SXM modules and AMD's upcoming Instinct MI300-series APUs and GPUs). HBM3 is estimated to account for around 50% of HBM demand in 2023 and HBM2E for around 39%, and HBM3 is expected to represent 60% of shipped HBM in 2024. This burgeoning demand, coupled with HBM3's higher average selling price (ASP), is expected to significantly elevate HBM revenue in the coming year.

To meet this demand, however, memory makers need to increase HBM output, and that is not particularly easy: in addition to making more memory devices, DRAM producers must assemble these devices into 8-Hi or even 12-Hi stacks, which requires specialized equipment. To satisfy demand for HBM2, HBM2E, and HBM3, DRAM makers need to procure additional tools to expand their HBM production lines. Delivery and testing of these tools takes between 9 and 12 months, so a tangible increase in HBM output is now expected sometime in Q2 2024.

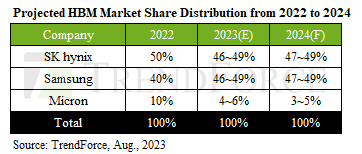

SK Hynix leads in HBM3 production, with most of its output going to Nvidia, TrendForce claims, whereas Samsung mostly produces HBM2E for other processor developers and CSPs. Micron, which does not make HBM3, can only supply HBM2E (which, according to reports, Intel uses on its Sapphire Rapids HBM processor) while it prepares to ramp up production of HBM3E (which the company calls HBM3 Gen2) in early 2024. Just yesterday, Nvidia introduced the industry's first HBM3E-based AI and HPC platform, and given that demand for Nvidia's products is overwhelming, Micron is likely to capitalize on the new HBM3E-enabled GH200 Grace Hopper platform.

Meanwhile, TrendForce anticipates that in 2023 and 2024 Samsung and SK Hynix will have nearly equal market shares, together accounting for approximately 95% of the market. Micron's share, by contrast, is projected to be between 3% and 6%.

TrendForce observes that the average selling price of HBM products declines steadily year over year. To stimulate customer demand and respond to softening demand for previous-generation HBM types, suppliers are cutting HBM2E and HBM2 prices in 2023. Although 2024 pricing strategies remain undecided, further price cuts for HBM2 and HBM2E are possible due to increased HBM supply and suppliers' ambitions to expand market share, according to TrendForce.

Nonetheless, HBM3 prices are expected to remain stable, and with its higher average selling price relative to HBM2E and HBM2, HBM3 could drive HBM revenue to an impressive $8.9 billion in 2024, a 127% year-over-year increase.
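As a rough sanity check, a 127% year-over-year increase means 2024 revenue would be about 2.27 times the 2023 level, which puts implied 2023 HBM revenue at roughly $3.9 billion. This is back-of-the-envelope arithmetic derived only from TrendForce's two quoted numbers, not a figure TrendForce published; the helper name `implied_prior_value` is purely illustrative.

```python
def implied_prior_value(current: float, yoy_growth_pct: float) -> float:
    """Back out last year's value from this year's value and its YoY growth.

    Illustrative helper (hypothetical name): current = prior * (1 + growth).
    """
    return current / (1 + yoy_growth_pct / 100)


# TrendForce projection quoted in the article: $8.9 billion in 2024, +127% YoY.
revenue_2024 = 8.9  # billions of USD
revenue_2023 = implied_prior_value(revenue_2024, 127)  # our derived estimate

print(f"Implied 2023 HBM revenue: ${revenue_2023:.1f} billion")
```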